- April 25, 2025

- Abi Therala

MCP vs A2A: What Google and Anthropic’s Protocols Mean for Your Projects

Google’s announcement of the Agent-to-Agent (A2A) protocol in April 2025, backed by over 50 technology companies including Atlassian, Salesforce, and PayPal, has sparked intense discussion in the AI community about how MCP and A2A compare. While both protocols aim to enhance AI capabilities, they serve distinctly different purposes in the evolving landscape of artificial intelligence.

In fact, these two protocols address critical challenges in modern AI systems. The Model Context Protocol (MCP) by Anthropic focuses on providing structured context to large language models, while A2A enables direct communication between AI agents across different platforms. Together, they’re shaping how AI agents interact, share information, and coordinate tasks.

We’ll explore the key differences between these protocols, their specific use cases, and how they can work together to create more capable AI applications. Whether you’re building AI solutions or planning to integrate them into your projects, understanding these protocols is crucial for making informed decisions about your AI architecture.

The Rise of Agentic AI and the Need for Protocols

As AI capabilities have advanced, traditional single-agent AI systems have revealed significant limitations that hinder their effectiveness in complex environments. This shortcoming has fueled the development of specialized communication protocols like MCP and A2A, each designed to address a different aspect of agent collaboration.

Why solo agents fall short in modern workflows

Traditional AI agents operate effectively in isolated, straightforward scenarios but struggle with multifaceted tasks that require coordinated efforts. Standalone LLMs typically have limited ability to understand multistep prompts—much less plan and execute complete workflows from a single request. Furthermore, solo agents often face challenges with context management, frequently “losing the plot” midway through complex processes.

The limitations become particularly evident in enterprise environments where AI needs to handle intricate workflows spanning multiple tools, datasets, and domains. According to research, over 72% of organizations have adopted AI in some form, yet most implementations rely on isolated agents that can’t effectively collaborate or share knowledge.

Consequently, solo agents struggle with maintaining contextual awareness over extended interactions, making dynamic decisions in changing environments, and adapting strategies based on outcomes. These limitations have pushed developers toward more collaborative approaches that mirror human teamwork.

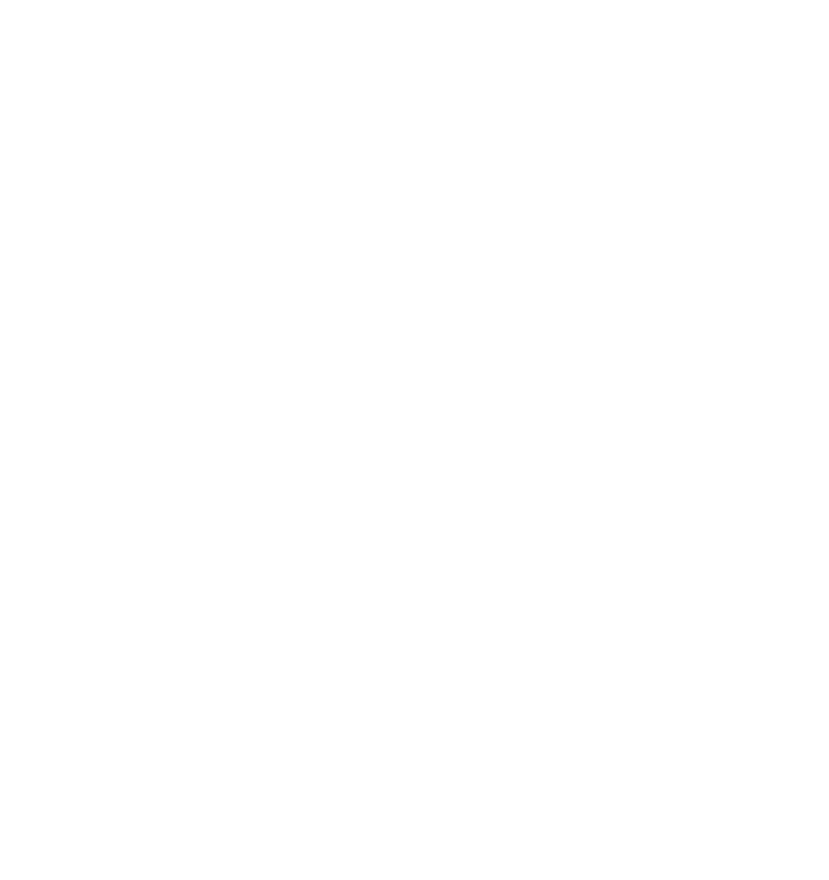

The emergence of multi-agent systems

Multi-agent systems (MAS) consist of multiple AI agents working collectively to perform tasks that would overwhelm any individual agent. Initially, these systems emerged to address the scalability and complexity challenges faced by solo agents, offering a more resilient and adaptable approach to problem-solving.

The collective behavior of multi-agent systems can improve accuracy, adaptability, and scalability. Multi-agent frameworks tend to outperform single agents because a pool of agents generates more candidate action plans, which gives the system more opportunities for learning and reflection.

Multi-agent systems can operate under various architectures. In centralized networks, a central unit contains the global knowledge base and oversees all agent operations. Alternatively, decentralized networks allow agents to share information directly with neighboring agents, creating more robust systems where the failure of one component does not cause the entire system to fail.

To maximize the benefits of agentic AI, these agents must be able to collaborate in a dynamic ecosystem across siloed data systems and applications. This necessity has spurred the development of specialized protocols, specifically MCP and A2A, that establish standardized methods for agent-to-agent communication and tool orchestration.

MCP and A2A: Protocols Shaping the Agent Ecosystem

The battle for AI protocol dominance has accelerated with two major competitors entering the arena. These protocols are establishing the foundation for how AI systems will collaborate, communicate, and function in complex workflows.

MCP and its role in tool orchestration

Introduced by Anthropic in November 2024, the Model Context Protocol (MCP) standardizes how applications provide context to Large Language Models (LLMs). Think of MCP as an “AI USB port” – a universal interface enabling seamless integration between language models and external systems. Essentially, it creates a standardized pathway for LLMs to access data sources, tools, and services.

MCP follows a client-server architecture where:

- MCP Hosts (like Claude Desktop or IDE tools) access data through MCP

- MCP Servers expose specific capabilities through the protocol

- MCP Clients maintain connections with servers, allowing LLMs to retrieve data and perform actions

Through this architecture, MCP addresses the “M×N integration problem”: connecting M AI models to N tools or data sources with bespoke connectors requires on the order of M×N integrations, whereas a shared protocol reduces that to roughly M+N implementations.
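
The arithmetic behind the M×N problem is easy to check. The sketch below is only an illustration, and it assumes two simplified cost models: without a shared protocol every model-tool pair needs its own connector, and with one each model implements a client once and each tool implements a server once. The function names are hypothetical.

```python
def bespoke_integrations(models: int, tools: int) -> int:
    """Connectors needed when every model-tool pair is wired by hand."""
    return models * tools


def protocol_integrations(models: int, tools: int) -> int:
    """Implementations needed when each side speaks a shared protocol once."""
    return models + tools


for models, tools in [(3, 5), (10, 40)]:
    print(
        f"{models} models, {tools} tools: "
        f"{bespoke_integrations(models, tools)} bespoke vs "
        f"{protocol_integrations(models, tools)} with a shared protocol"
    )
```

With 10 models and 40 tools, that is 400 connectors versus 50 implementations, and the gap widens as either side grows.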

What is A2A and how it enables agent-to-agent messaging

The Agent-to-Agent (A2A) protocol, developed by Google, takes a different angle: it enables direct communication between independent AI agents. A2A primarily focuses on agent-to-agent interaction rather than tool access.

The protocol facilitates communication between a “client” agent (that formulates tasks) and a “remote” agent (that executes those tasks). This task-oriented approach allows agents to work collaboratively toward fulfilling user requests.

Google has carefully positioned A2A as complementary to MCP, stating, “A2A is an open protocol that complements Anthropic’s MCP, which provides helpful tools and context to agents.”

How agent cards enable discovery and trust

A2A’s core strength lies in its discovery mechanism through Agent Cards. These are public metadata files (typically at /.well-known/agent.json) that describe an agent’s capabilities, skills, endpoint URL, and authentication requirements.

Agent Cards function like digital business cards, enabling client agents to:

- Identify agents with specific capabilities

- Review potential collaborators without setup

- Understand authentication needs and schemes

- Determine default input/output formats

This discovery system allows agent ecosystems to evolve naturally without hard-coded connections, making A2A particularly valuable for building dynamic, collaborative AI systems.
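
To make discovery concrete, here is a minimal sketch of a client agent reading an Agent Card and filtering by skill. The card content, the example.com URL, and the exact field names are assumptions chosen for illustration. Check the A2A specification for the authoritative schema. A real client would fetch the JSON over HTTPS from the agent’s well-known path instead of using an inline string.

```python
import json

# Hypothetical Agent Card; field names and values are illustrative only.
AGENT_CARD_JSON = """
{
  "name": "Inventory Manager",
  "description": "Checks real-time stock availability",
  "url": "https://inventory.example.com/a2a",
  "authentication": {"schemes": ["bearer"]},
  "defaultInputModes": ["text"],
  "defaultOutputModes": ["text", "data"],
  "skills": [
    {"id": "check_stock", "name": "Check stock", "tags": ["inventory", "shipping"]}
  ]
}
"""


def parse_card(raw: str) -> dict:
    """Parse a card and verify the fields this sketch relies on."""
    card = json.loads(raw)
    missing = {"name", "url", "skills"} - set(card)
    if missing:
        raise ValueError(f"agent card missing fields: {sorted(missing)}")
    return card


def find_agents(cards: list[dict], tag: str) -> list[str]:
    """Return names of agents that advertise at least one skill with the tag."""
    return [
        card["name"]
        for card in cards
        if any(tag in skill.get("tags", []) for skill in card["skills"])
    ]


card = parse_card(AGENT_CARD_JSON)
print(find_agents([card], "inventory"))
print(card["authentication"]["schemes"])
```

Because the client only inspects published metadata, adding a new agent to the ecosystem means publishing another card, not changing client code.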

MCP vs A2A comparison: Architecture, Roles, and Flow

When examining the underlying architecture of these protocols, fundamental differences emerge in how they approach agent communication and task execution.

Differences in communication models

The Model Context Protocol (MCP) operates on a client-server architecture with three distinct components working in harmony. MCP Hosts (like Claude Desktop) act as central coordinators, managing client instances and enforcing security policies. MCP Clients establish one-on-one connections with servers, creating stateful sessions and routing messages. Finally, MCP Servers expose specific capabilities by wrapping external resources, APIs, or tools.

In contrast, A2A builds everything around tasks—specific units with unique IDs that move through defined states: submitted → working → input-required → completed/failed/canceled. This task-based approach excels in complex interactions that involve multiple stages and uncertain outcomes.
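
The task lifecycle can be sketched as a small state machine. This is an illustration, not the A2A reference implementation: the state names come from the list above, but the transition table is an assumption, since the text names the states without spelling out every legal edge.

```python
from enum import Enum


class TaskState(Enum):
    SUBMITTED = "submitted"
    WORKING = "working"
    INPUT_REQUIRED = "input-required"
    COMPLETED = "completed"
    FAILED = "failed"
    CANCELED = "canceled"


# Assumed transition table; terminal states have no outgoing edges.
ALLOWED = {
    TaskState.SUBMITTED: {TaskState.WORKING, TaskState.FAILED, TaskState.CANCELED},
    TaskState.WORKING: {
        TaskState.INPUT_REQUIRED,
        TaskState.COMPLETED,
        TaskState.FAILED,
        TaskState.CANCELED,
    },
    TaskState.INPUT_REQUIRED: {TaskState.WORKING, TaskState.FAILED, TaskState.CANCELED},
    TaskState.COMPLETED: set(),
    TaskState.FAILED: set(),
    TaskState.CANCELED: set(),
}


class Task:
    """A unit of work with a unique ID and a tracked lifecycle."""

    def __init__(self, task_id: str) -> None:
        self.task_id = task_id
        self.state = TaskState.SUBMITTED
        self.history = [TaskState.SUBMITTED]

    def advance(self, new_state: TaskState) -> None:
        """Move to new_state, rejecting edges the table does not allow."""
        if new_state not in ALLOWED[self.state]:
            raise ValueError(f"{self.state.value} -> {new_state.value} is not allowed")
        self.state = new_state
        self.history.append(new_state)

    @property
    def is_terminal(self) -> bool:
        return not ALLOWED[self.state]


task = Task("task-001")
for step in (
    TaskState.WORKING,
    TaskState.INPUT_REQUIRED,  # remote agent asks the client for more detail
    TaskState.WORKING,
    TaskState.COMPLETED,
):
    task.advance(step)
print([state.value for state in task.history])
```

Keeping the history alongside the current state is what lets a client poll a long-running task and see how it arrived where it is.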

Context injection vs task-based messaging

MCP focuses primarily on Context Injection—providing models with relevant information at the precise moment they need it. Instead of tracking task states, MCP structures everything around three core elements:

- Tools (functions enabling models to take action)

- Resources (organized data providing context)

- Prompts (ready-to-use templates)
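
A toy in-memory server helps show how the three elements differ. The `ToyContextServer` class below is hypothetical and does not use the official MCP SDK or wire format. It only mirrors the idea that tools are callable, resources are addressable data, and prompts are reusable templates. The `memo://` URI and the example entries are made up.

```python
from typing import Any, Callable


class ToyContextServer:
    """In-memory stand-in for a server exposing tools, resources, and prompts."""

    def __init__(self) -> None:
        self.tools: dict[str, Callable[..., Any]] = {}
        self.resources: dict[str, str] = {}
        self.prompts: dict[str, str] = {}

    def tool(self, name: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
        """Decorator that registers a function as a named tool."""
        def register(fn: Callable[..., Any]) -> Callable[..., Any]:
            self.tools[name] = fn
            return fn
        return register

    def call_tool(self, name: str, **arguments: Any) -> Any:
        return self.tools[name](**arguments)

    def read_resource(self, uri: str) -> str:
        return self.resources[uri]

    def render_prompt(self, name: str, **values: str) -> str:
        return self.prompts[name].format(**values)


server = ToyContextServer()


@server.tool("add")
def add(a: int, b: int) -> int:
    """A tool: lets the model take an action and get a result back."""
    return a + b


# A resource: existing data the model can read for context.
server.resources["memo://return-policy"] = "Returns accepted within 30 days."
# A prompt: a ready-to-use template with named slots.
server.prompts["summarize"] = "Summarize for a customer: {text}"

print(server.call_tool("add", a=2, b=3))
print(server.render_prompt("summarize", text=server.read_resource("memo://return-policy")))
```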

Conversely, A2A employs a completely different approach centered on natural language for agent communication, making it ideal for teamwork scenarios requiring flexibility. The protocol meticulously tracks state changes and delivers status updates through polling, streaming, or notifications.

How each protocol handles data, tools, and results

A2A uses Artifacts as containers for task outputs. These artifacts package complete, self-contained results from remote agents’ work, potentially including text explanations, images, and structured JSON—all neatly organized with clear content types.

Meanwhile, MCP employs Resources as context elements that clients can access. Unlike A2A’s task-focused artifacts, MCP resources represent existing data providing context to language models. Each resource has its own URI, accessible through standard endpoints.

This fundamental difference highlights their complementary nature: A2A excels at packaging multimodal results between independent agents, whereas MCP specializes in providing structured context to language models within a system.
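
The contrast is easier to see as data structures. The dataclasses below are a sketch with assumed field names, not the schemas from either specification. An artifact is tied to the task that produced it and can carry several typed parts, while a resource is pre-existing data identified by a URI.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Part:
    """One piece of an artifact, labeled with its content type."""
    content_type: str
    content: Any


@dataclass
class Artifact:
    """Output produced by a remote agent for a specific task."""
    task_id: str
    name: str
    parts: list[Part] = field(default_factory=list)

    def content_types(self) -> list[str]:
        return [part.content_type for part in self.parts]


@dataclass(frozen=True)
class Resource:
    """Existing data exposed as context, addressed by its URI."""
    uri: str
    mime_type: str
    text: str


# Hypothetical examples: a multi-part task result and a static policy document.
report = Artifact(
    task_id="task-001",
    name="pricing-report",
    parts=[
        Part("text/plain", "Two models fit the budget."),
        Part("application/json", {"skus": ["EB-100", "EB-150"]}),
    ],
)
policy = Resource(
    uri="file:///policies/returns.md",
    mime_type="text/markdown",
    text="Returns accepted within 30 days.",
)

print(report.content_types())
print(policy.uri)
```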

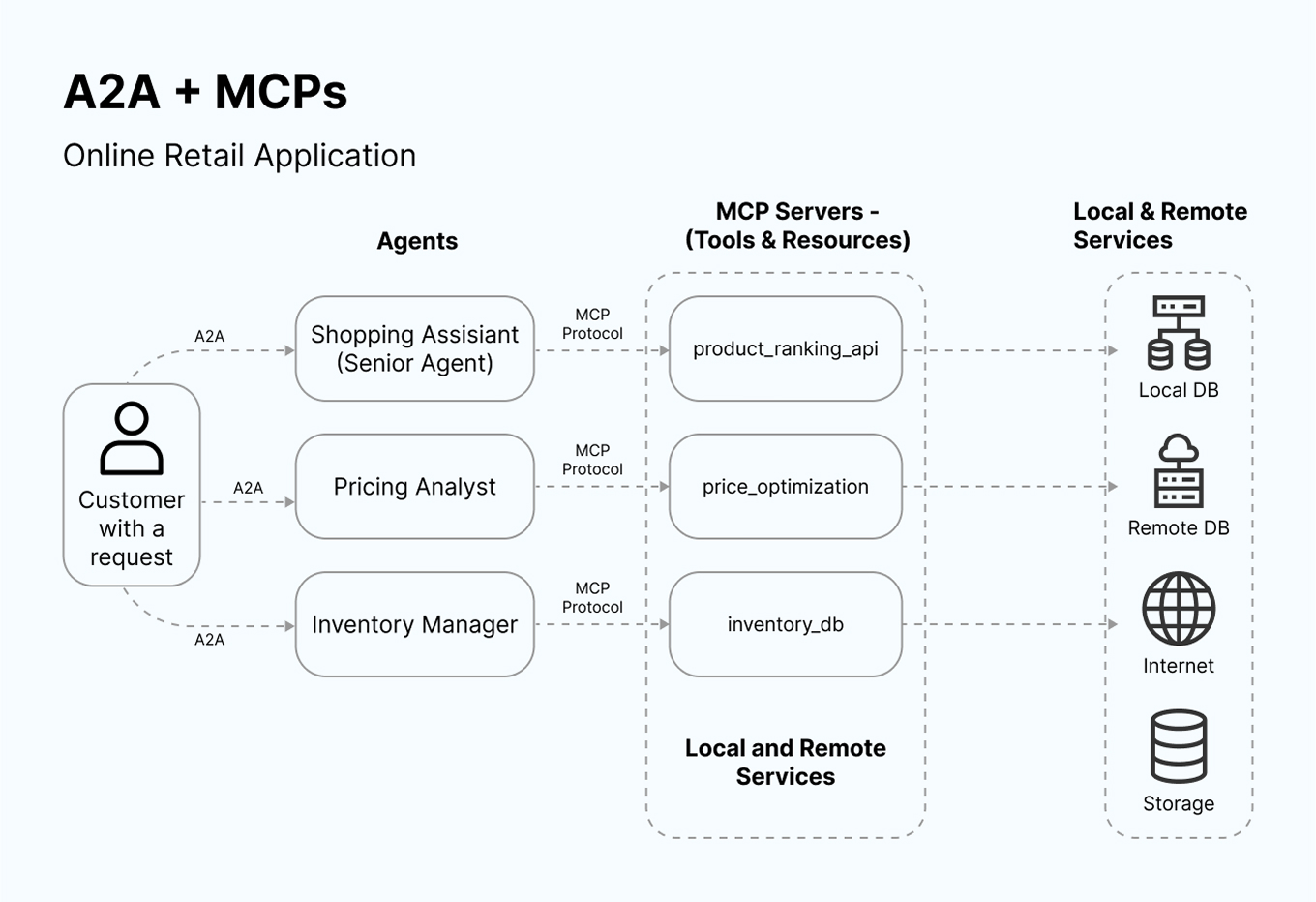

To see how MCP and A2A fit together in an agentic system, let’s walk through a retail scenario, illustrated in the diagram above.

The Setup

A customer enters an e-commerce platform with a query: “Find me the best wireless earbuds under $150, available to ship today.”

Instead of a single AI trying to handle everything, the request is triaged by a Shopping Assistant agent—the senior orchestrator in this system.

A2A in Action

The assistant delegates subtasks via A2A to two peer agents:

- A Pricing Analyst, tasked with determining optimal pricing based on market trends.

- An Inventory Manager, responsible for checking real-time stock availability.

These agents, like domain-specific teammates, operate autonomously and respond via A2A messaging. No shared memory, no tightly coupled logic—just clean, structured collaboration.

MCP in Action

Each agent calls specific tools via MCP:

- The assistant uses the product_ranking_api to score and prioritize recommendations.

- The pricing analyst consults the price_optimization tool.

- The inventory agent queries the inventory_db.

These tools live behind an MCP server, abstracted as callable services, just like an API gateway for agents. They may pull from local data, cloud databases, or external APIs—the agent doesn’t need to know or care. It just sends the request and parses the response.

The Outcome

Within seconds, the customer is presented with:

- A top-ranked product list

- Smart pricing insights

- Verified availability with shipping estimates

All of this happens through distributed intelligence: not one big model, but a network of communicating agents and callable tools.
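
The whole flow can be simulated in a few lines. Everything in this sketch is a stand-in: `delegate` plays the role of an A2A task hand-off and `call_tool` plays the role of an MCP tool call, but both are plain in-process function calls with no networking. The catalog, stock levels, and tool logic are made up, and the `price_optimization` stand-in is only a simple budget filter, not a real pricing model.

```python
# Hypothetical data that the tools would normally fetch from real systems.
CATALOG = [
    {"sku": "EB-100", "price": 129.0, "rating": 4.6},
    {"sku": "EB-150", "price": 139.0, "rating": 4.7},
    {"sku": "EB-200", "price": 149.0, "rating": 4.8},
    {"sku": "EB-300", "price": 179.0, "rating": 4.9},
]
STOCK = {"EB-100": 12, "EB-150": 3, "EB-200": 0, "EB-300": 5}


def product_ranking_api(items):
    return sorted(items, key=lambda item: item["rating"], reverse=True)


def price_optimization(items, budget):
    return [item for item in items if item["price"] < budget]


def inventory_db(sku):
    return STOCK.get(sku, 0)


TOOLS = {
    "product_ranking_api": product_ranking_api,
    "price_optimization": price_optimization,
    "inventory_db": inventory_db,
}


def call_tool(name, **arguments):
    """Stand-in for an MCP tool call: look up a tool by name and invoke it."""
    return TOOLS[name](**arguments)


def pricing_analyst(task):
    return call_tool("price_optimization", items=task["items"], budget=task["budget"])


def inventory_manager(task):
    return [item for item in task["items"] if call_tool("inventory_db", sku=item["sku"]) > 0]


AGENTS = {"pricing": pricing_analyst, "inventory": inventory_manager}


def delegate(agent, task):
    """Stand-in for an A2A hand-off: send a task to a peer agent by name."""
    return AGENTS[agent](task)


def shopping_assistant(budget):
    affordable = delegate("pricing", {"items": CATALOG, "budget": budget})
    shippable = delegate("inventory", {"items": affordable})
    return call_tool("product_ranking_api", items=shippable)


for product in shopping_assistant(150.0):
    print(product["sku"], product["price"], product["rating"])
```

Note that the assistant never touches `STOCK` or the pricing logic directly. It only knows which agent to ask and which tool to call, which is the separation the two protocols are meant to provide.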

Future of AI Projects: Choosing the Right Protocol

Choosing between MCP and A2A is increasingly becoming a strategic decision for organizations building AI ecosystems. As these protocols mature, understanding their strengths and applications will determine the success of your AI initiatives.

How to decide between MCP and A2A for your use case

When evaluating MCP and A2A for your project, consider your primary objectives:

Choose MCP when:

- Your project centers on tool-augmented AI (like a chatbot accessing CRM data)

- You need real-time data integration for dynamic context

- You’re working with multiple LLMs requiring universal tool access

Choose A2A when:

- You’re building multi-agent workflows that span separate systems and data stores

- You’re running enterprise processes that cross departmental boundaries

- You need agents from different vendors to collaborate seamlessly

Additionally, many teams find value in implementing both protocols. As Google notes, “A2A is an open protocol that complements Anthropic’s MCP”. This hybrid approach enables powerful architectures where primary agents use A2A for task delegation while leveraging MCP connectors to access necessary information.

Composable AI and plug-and-play agent networks

The future of AI development lies in composability—creating systems from interchangeable, specialized components. Both protocols support this vision through different mechanisms.

MCP standardizes how applications provide context to LLMs, functioning similarly to a “USB-C port for AI applications”. This standardization simplifies integration and ensures reliable communication between AI agents and external tools.

On the other hand, A2A offers a discovery mechanism through Agent Cards that describe capabilities, skills, endpoints, and authentication requirements. This enables client agents to identify potential collaborators without manual setup—creating truly plug-and-play agent networks.

Risks of fragmentation and the need for standardization

Despite these advances, the AI ecosystem faces challenges from potential fragmentation. Currently, 16 states have enacted legislation related to AI and/or data privacy, with an additional 14 states considering similar measures. This patchwork of regulations creates compliance difficulties for companies operating across jurisdictions.

Moreover, competing protocols could lead to technical fragmentation. As one expert notes, “For something to become an industry standard, there needs to be mass adoption, to prevent the establishment of too many competing standards”.

Ultimately, successful standardization requires balancing innovation with interoperability. Organizations should advocate for open standards while remaining flexible enough to adapt as the ecosystem evolves.

Conclusion

Rather than competing protocols, MCP and A2A represent complementary solutions addressing different aspects of AI agent collaboration. MCP excels at providing structured context and tool access, while A2A enables seamless agent-to-agent communication across platforms.

Organizations building AI systems should evaluate their specific needs carefully. Projects requiring extensive tool integration benefit from MCP’s standardized approach, while complex multi-agent workflows demand A2A’s robust messaging capabilities. Many successful implementations combine both protocols, creating powerful hybrid architectures that maximize the strengths of each approach.

The future success of these protocols depends heavily on widespread adoption and standardization. Technical fragmentation poses a significant risk, potentially limiting the effectiveness of agent collaboration across different platforms and vendors. Therefore, supporting open standards while maintaining flexibility will prove crucial for organizations investing in AI agent technologies.

Ultimately, MCP and A2A mark the beginning of truly collaborative AI systems. These protocols lay the groundwork for composable, plug-and-play agent networks that will transform how organizations build and deploy AI solutions. Success in this new era requires understanding both protocols’ capabilities and choosing the right combination for your specific use case.

FAQs

Q1. What are the main differences between MCP and A2A protocols?

MCP focuses on providing structured context to language models and tool orchestration, while A2A enables direct communication between AI agents. MCP uses a client-server architecture, whereas A2A employs a task-based messaging approach.

Q2. How do Agent Cards work in the A2A protocol?

Agent Cards are public metadata files that describe an agent’s capabilities, skills, endpoint URL, and authentication requirements. They function like digital business cards, allowing client agents to discover and evaluate potential collaborators without manual setup.

Q3. When should I choose MCP over A2A for my AI project?

Choose MCP when your project centers on tool-augmented AI, requires real-time data integration for dynamic context, or involves multiple language models needing universal tool access. A2A is better suited for multi-agent workflows across different data setups or enterprise processes crossing departmental boundaries.

Q4. Can MCP and A2A be used together in the same project?

Yes, many teams find value in implementing both protocols. This hybrid approach enables powerful architectures where primary agents use A2A for task delegation while leveraging MCP connectors to access necessary information and tools.

Q5. What risks are associated with the adoption of these protocols?

The main risks include potential fragmentation of the AI ecosystem due to competing protocols and varying regulations across jurisdictions. This could lead to compliance difficulties for companies operating in multiple areas and challenges in establishing industry-wide standards for AI agent communication.